Face Depth on OAK-D Lite

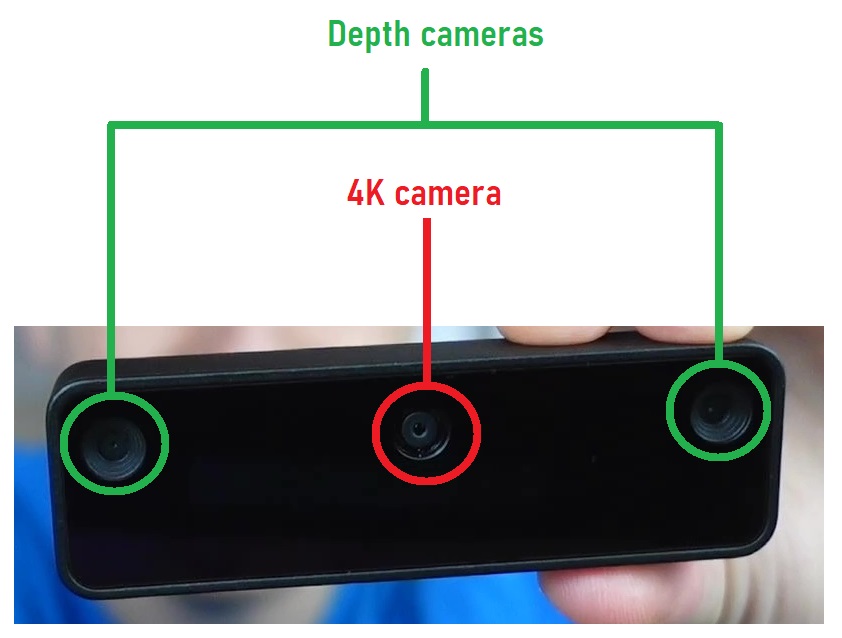

This project demonstrates how to use the OAK-D Lite stereo camera with DepthAI to detect faces and estimate their distance in real time. A luxonis/yunet face detection model runs on the device, and stereo depth computed from the two mono cameras gives the distance to each detected face.

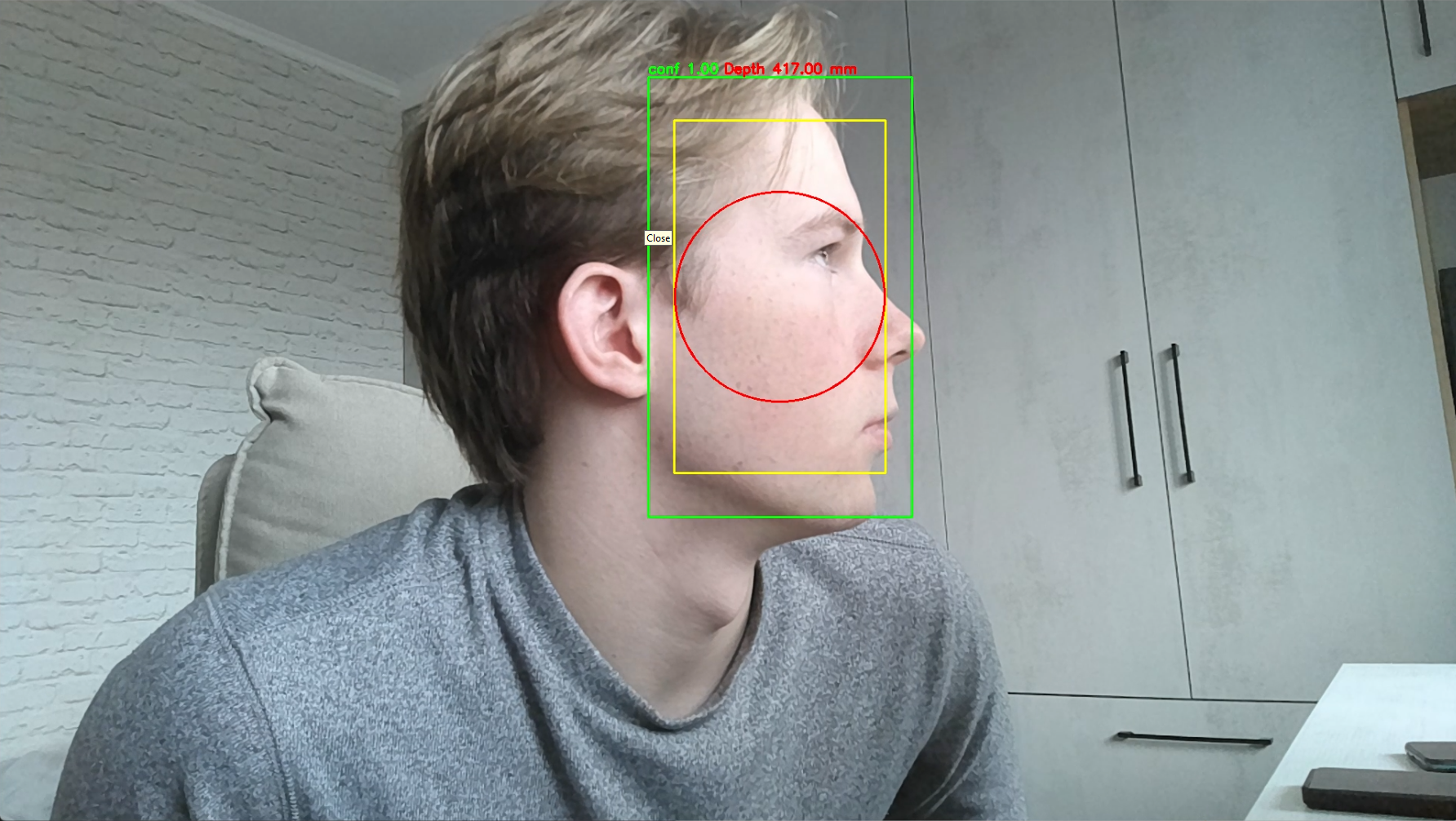

The green box is the YuNet face detection. To obtain a reliable depth value, several filtering steps are applied. First, the bounding box is shrunk to remove artifacts near its corners; second, a circular mask samples depth only from the center of the face. A percentile filter (20th to 80th percentile) then rejects outliers, which yields a stable depth estimate. Optionally, a low-alpha exponential filter can be added to smooth small frame-to-frame jitter as the face moves.
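The optional exponential filter can be sketched as an exponential moving average. This is a minimal sketch, not the project's code: the class name `ExpSmoother`, the default `alpha=0.2`, and the choice to smooth the scalar depth value (rather than the box coordinates) are assumptions made for illustration.

```python
class ExpSmoother:
    """Exponential moving average over a stream of scalar measurements.

    Update rule: value = alpha * new + (1 - alpha) * value.
    A low alpha weights history heavily, so single-frame jumps are damped.
    """

    def __init__(self, alpha: float = 0.2) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value = None  # no estimate until the first measurement arrives

    def update(self, measurement: float) -> float:
        if self.value is None:
            # Seed with the first measurement instead of ramping up from zero.
            self.value = measurement
        else:
            self.value = self.alpha * measurement + (1.0 - self.alpha) * self.value
        return self.value

    def reset(self) -> None:
        # Call when the face is lost so a new face does not inherit old state.
        self.value = None


# Usage: feed one raw depth estimate (in mm) per frame.
smoother = ExpSmoother(alpha=0.2)
smoothed = [smoother.update(d) for d in [1000.0, 1100.0, 1000.0]]
```

A smaller `alpha` gives a steadier reading but lags behind real movement, so the value is a trade-off worth tuning on live data.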

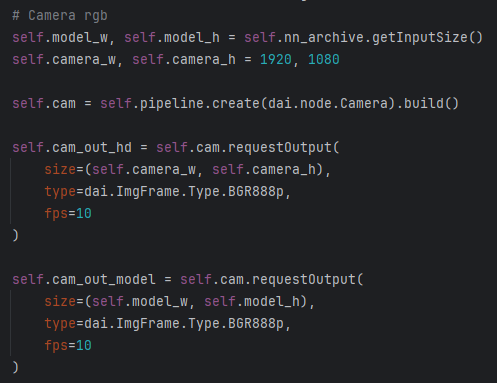

The RGB camera has two output queues. The first feeds the YuNet NN at 640×360, a size that scales cleanly to full HD because (640, 360) × 3 = (1920, 1080). The second outputs HD frames at 1920×1080 so the face can be cropped at a higher resolution.
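Mapping a detection from the NN frame to the HD frame is then a per-axis multiplication by 3. A minimal sketch, assuming the detector output has already been converted to pixel coordinates on the 640×360 frame and that this frame is a downscaled (not cropped) copy of the HD view; `scale_box` and the size constants are hypothetical names.

```python
NN_SIZE = (640, 360)    # (width, height) of frames sent to YuNet
HD_SIZE = (1920, 1080)  # (width, height) of the HD output queue


def _clamp(v, lo, hi):
    return min(max(v, lo), hi)


def scale_box(box, src=NN_SIZE, dst=HD_SIZE):
    """Map an (x1, y1, x2, y2) pixel box from the src frame to the dst frame."""
    sx = dst[0] / src[0]  # 1920 / 640 = 3.0
    sy = dst[1] / src[1]  # 1080 / 360 = 3.0
    x1, y1, x2, y2 = box
    # Scale, round to whole pixels, and clamp so the box stays inside dst.
    return (
        _clamp(round(x1 * sx), 0, dst[0]),
        _clamp(round(y1 * sy), 0, dst[1]),
        _clamp(round(x2 * sx), 0, dst[0]),
        _clamp(round(y2 * sy), 0, dst[1]),
    )


print(scale_box((100, 50, 180, 150)))  # (300, 150, 540, 450)
```

The resulting box can be used directly to slice the HD frame, for example `hd_frame[y1:y2, x1:x2]`.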

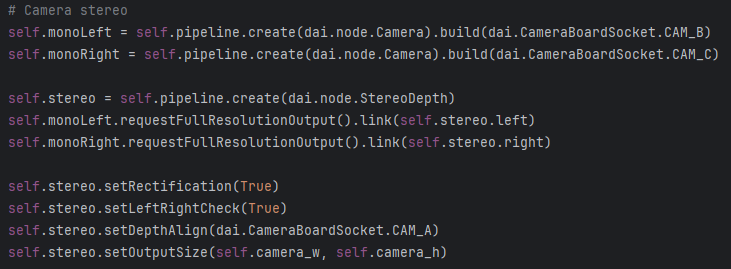

The stereo cameras then generate a depth frame at the same resolution as the RGB camera. The depth frame is aligned to the RGB image, so the depth pixel at a given (x, y) corresponds to the same point in the scene as the RGB pixel at that (x, y).

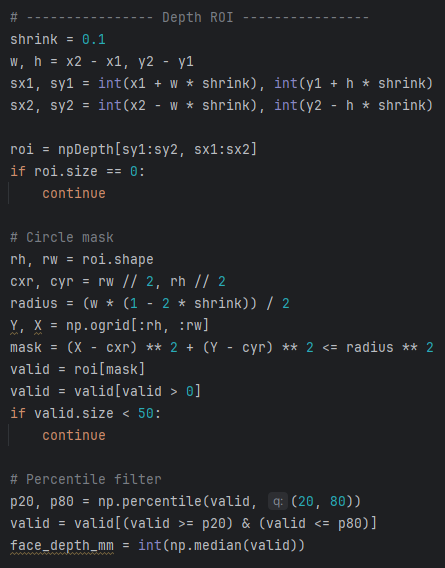

Filtering Depth

YuNet detection provides face bounding box coordinates (x1, y1, x2, y2).

A shrink parameter defines how much this box is reduced.

Next, the shrunk bounding box coordinates (sx1, sy1, sx2, sy2) are computed, and the depth map is cropped using these coordinates.
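The shrink and crop steps can be sketched in NumPy as follows. This assumes `shrink` is the fraction of the box width (and height) trimmed from each side; the description above does not say exactly how the parameter is interpreted, so treat `shrink_box`, `crop_depth`, and the 0.2 default as hypothetical.

```python
import numpy as np


def shrink_box(box, shrink=0.2):
    """Trim `shrink` of the box width from the left and right edges and
    `shrink` of the height from the top and bottom (assumed semantics)."""
    if not 0.0 <= shrink < 0.5:
        raise ValueError("shrink must be in [0, 0.5)")
    x1, y1, x2, y2 = box
    dx = round((x2 - x1) * shrink)
    dy = round((y2 - y1) * shrink)
    return x1 + dx, y1 + dy, x2 - dx, y2 - dy


def crop_depth(depth, box):
    """Crop an H x W depth map to (sx1, sy1, sx2, sy2), clamped to the frame."""
    sx1, sy1, sx2, sy2 = box
    h, w = depth.shape[:2]
    sx1, sx2 = max(0, sx1), min(w, sx2)
    sy1, sy2 = max(0, sy1), min(h, sy2)
    return depth[sy1:sy2, sx1:sx2]  # rows are y, columns are x


# Stand-in for an RGB-aligned 1920x1080 depth frame.
depth = np.zeros((1080, 1920), dtype=np.uint16)
shrunk = shrink_box((300, 150, 540, 450), shrink=0.2)
crop = crop_depth(depth, shrunk)
```

Slicing returns a view, so no depth data is copied at this step.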

A circular mask is then placed at the center of the shrunk box, with the diameter equal to the box width.
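One way to build the circular mask is with NumPy index grids, as sketched below. `circular_mask` is a hypothetical helper; the diameter is set to the crop width as described, which means the circle is clipped at the top and bottom if the crop is wider than it is tall.

```python
import numpy as np


def circular_mask(height, width):
    """Boolean mask of a circle centred in a height x width crop.

    The diameter equals the crop width; rows outside the circle are False.
    """
    cy = (height - 1) / 2.0  # centre in pixel-index coordinates
    cx = (width - 1) / 2.0
    radius = width / 2.0
    ys, xs = np.ogrid[:height, :width]  # broadcastable row and column indices
    return (xs - cx) ** 2 + (ys - cy) ** 2 <= radius ** 2


mask = circular_mask(180, 144)
```

Because `np.ogrid` returns a column vector and a row vector, the distance test broadcasts to the full crop shape without allocating two full coordinate grids.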

The mask is applied to the cropped depth region, and a minimum of 50 valid depth pixels is required.

Finally, a percentile filter (20th to 80th percentile) removes outliers, and the mean of the remaining values is taken as the face depth.
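Putting the last steps together, a minimal sketch of the face-depth estimate might look like this. It assumes a `uint16` depth map in millimetres where 0 marks an invalid pixel (a common convention for DepthAI depth output, but check your pipeline), and it returns `None` when fewer than 50 valid pixels remain; how the project handles that case is not stated. `face_depth_mm` is a hypothetical name.

```python
import numpy as np

MIN_VALID_PIXELS = 50  # minimum number of valid depth samples


def face_depth_mm(depth_crop, mask, low_pct=20, high_pct=80,
                  min_valid=MIN_VALID_PIXELS):
    """Masked, percentile-filtered mean depth, or None if too few samples.

    Assumes depth_crop and mask have the same shape and 0 means invalid.
    """
    values = depth_crop[mask]                        # keep pixels inside the circle
    values = values[values > 0].astype(np.float64)   # drop invalid (zero) depth
    if values.size < min_valid:
        return None
    lo, hi = np.percentile(values, [low_pct, high_pct])
    kept = values[(values >= lo) & (values <= hi)]   # reject outliers on both ends
    return float(kept.mean())
```

Note that `np.percentile` interpolates linearly between samples by default, so the 20th and 80th percentile bounds need not be actual depth values.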